NVIDIA AI Researchers Explore Upcycling Large Language Models into Sparse Mixture-of-Experts

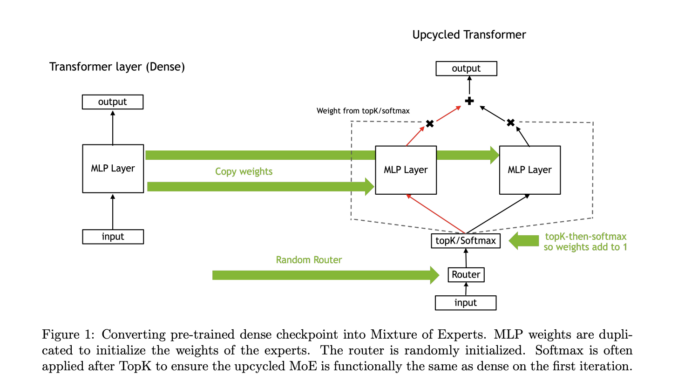

Mixture-of-Experts (MoE) models are becoming increasingly important to progress in AI, particularly in natural language processing. Unlike traditional dense models, which use all of their parameters for every input, MoE architectures activate only a subset of specialized expert networks for each input. This […]
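To make "selectively activating subsets of experts" concrete, here is a minimal sketch of top-k routing in plain Python. It assumes a linear router that scores every expert, a softmax taken over only the k selected logits (one common variant; some designs apply the softmax over all experts before selecting), and experts that preserve the input dimension. The names `top_k_route` and `moe_forward` are hypothetical and illustrative, not NVIDIA's implementation.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def matvec(matrix: Sequence[Sequence[float]], x: Sequence[float]) -> Vector:
    """Multiply a row-major matrix by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]


def softmax(scores: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router: Sequence[Sequence[float]], x: Sequence[float],
                k: int) -> List[Tuple[int, float]]:
    """Return (expert_index, gate_weight) for the k highest-scoring experts.

    Assumption: gate weights are a softmax over the selected logits only.
    """
    logits = matvec(router, x)
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in top])
    return list(zip(top, weights))


def moe_forward(x: Vector, experts: List[Callable[[Vector], Vector]],
                router: Sequence[Sequence[float]], k: int = 2) -> Vector:
    """Gate-weighted sum of the selected experts; the others never run."""
    out = [0.0] * len(x)  # assumption: each expert maps dim -> dim
    for idx, gate in top_k_route(router, x, k):
        y = experts[idx](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Usage: 8 random linear experts, only 2 of which are evaluated per input.
random.seed(0)
dim, num_experts = 4, 8
calls = [0] * num_experts  # counts how often each expert is evaluated


def make_expert(i: int) -> Callable[[Vector], Vector]:
    w = [[random.gauss(0, 0.5) for _ in range(dim)] for _ in range(dim)]

    def expert(x: Vector) -> Vector:
        calls[i] += 1
        return matvec(w, x)

    return expert


experts = [make_expert(i) for i in range(num_experts)]
router = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
x = [1.0, -0.5, 0.25, 2.0]
y = moe_forward(x, experts, router, k=2)
print(len(y), sum(calls))  # prints "4 2"
```

The call counter shows the source of MoE's efficiency: although the layer holds eight experts' worth of parameters, each input pays the compute cost of only two of them.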