The Need for Efficient On-Device Language Models

Large language models have become integral to AI systems, enabling tasks like multilingual translation, virtual assistance, and automated reasoning through transformer-based architectures. While highly capable, these models are typically large, requiring powerful cloud infrastructure for training and inference. This reliance leads to latency, high costs, and privacy concerns, limiting their deployment on resource-constrained edge devices. Models like GPT and LLaMA, with billions of parameters, cannot efficiently run on local hardware due to their size and the complexity of their training and inference processes. Moreover, their dependence on massive datasets and high-performance GPUs makes them unsuitable for mobile or embedded environments. To overcome these challenges, there is a growing need for lightweight, efficient models that can perform well locally without sacrificing reasoning and context-handling capabilities.

Limitations of Existing Solutions

Several methods have been explored to address these challenges. Sparse attention mechanisms, such as NSA and MoBA, aim to reduce memory consumption; however, they either fall short in decoding efficiency or introduce significant architectural overhead. For data handling, previous methods have leaned on large-scale web scraping, resulting in noisy and unstructured corpora. Filtering methods have included fastText classifiers and manual curation, which either lack depth or scalability. On the training side, frameworks such as StepLaw have been used to optimize hyperparameters based on predictable scaling laws; however, they often require extensive experimentation and GPU cycles, creating a barrier to entry. Inference optimizations, such as FlashAttention, reduce computational complexity but still fall short of delivering the speeds required for real-time applications on edge devices.

Introducing MiniCPM4: Efficient Architecture, Data, and Inference

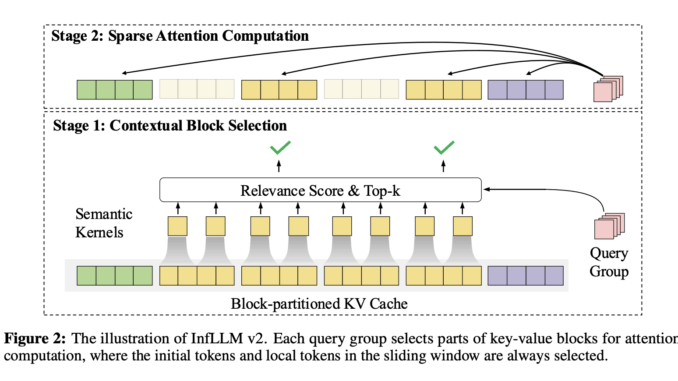

Researchers from OpenBMB introduced MiniCPM4, a suite of highly efficient large language models designed specifically for on-device deployment. The suite includes two variants: one with 0.5 billion parameters and another with 8 billion. The models were built with improvements in four core dimensions: model architecture, training data, training algorithm, and inference systems. For architecture, the team introduced InfLLM v2, a sparse attention mechanism that accelerates both prefilling and decoding without sacrificing context comprehension. On the data front, UltraClean was employed to generate and filter training datasets, enabling the use of just 8 trillion training tokens compared to the 36 trillion used by competitive models like Qwen3-8B. ModelTunnel v2 guided the training process with efficient hyperparameter tuning, and CPM.cu handled inference with CUDA-based execution.

Technical Innovations in MiniCPM4

MiniCPM4’s tech stack is designed to strike a balance between performance and resource utilization. InfLLM v2 partitions key-value caches into blocks and selects top-K relevant blocks using semantic kernels for attention, reducing attention computation by 60% compared to NSA. Its dynamic context block selection and token-level query group processing allow it to support sequences up to 128K tokens while maintaining speed and coherence. UltraClean relies on efficient data verification, utilizing a pre-trained LLM and annealing-based fine-tuning on 10 billion tokens. This results in higher-quality datasets, UltraFineWeb in English and UltraFineWeb-zh in Chinese, which outperform FineWeb by 3.61 and 1.98 percentage points, respectively, in average benchmark performance. UltraChat v2 further supports post-training by generating reasoning-rich, multi-turn dialogues.
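The block-selection idea behind InfLLM v2 can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes each key block is summarized by the mean of its key vectors (a simple stand-in for the "semantic kernels" described above), and the function names `select_top_k_blocks` and `block_sparse_attention` are hypothetical. It handles a single query vector and uses only the Python standard library.

```python
import math


def select_top_k_blocks(query, keys, block_size, top_k):
    """Return indices of the top_k key blocks most relevant to the query.

    Assumption: a block is summarized by the mean of its key vectors, and
    relevance is the dot product between the query and that summary.
    """
    dim = len(query)
    scores = []
    for start in range(0, len(keys), block_size):
        block = keys[start:start + block_size]
        mean_key = [sum(k[d] for k in block) / len(block) for d in range(dim)]
        score = sum(q * m for q, m in zip(query, mean_key))
        scores.append((score, start // block_size))
    # Keep the highest-scoring blocks, then report them in positional order.
    best = sorted(scores, reverse=True)[:top_k]
    return sorted(idx for _, idx in best)


def block_sparse_attention(query, keys, values, block_size, top_k):
    """Softmax attention for one query, restricted to the selected blocks."""
    chosen = select_top_k_blocks(query, keys, block_size, top_k)
    positions = [
        p
        for b in chosen
        for p in range(b * block_size, min((b + 1) * block_size, len(keys)))
    ]
    scale = 1.0 / math.sqrt(len(query))
    logits = [scale * sum(q * k for q, k in zip(query, keys[p])) for p in positions]
    peak = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(l - peak) for l in logits]
    total = sum(weights)
    dim_v = len(values[0])
    return [
        sum(w * values[p][d] for w, p in zip(weights, positions)) / total
        for d in range(dim_v)
    ]


if __name__ == "__main__":
    # Toy cache: 8 cached tokens, 2-dimensional keys, blocks of 2 tokens.
    keys = [[0.1, 0.0], [0.1, 0.0], [5.0, 0.0], [5.0, 0.0],
            [-1.0, 0.0], [-1.0, 0.0], [3.0, 0.0], [3.0, 0.0]]
    values = [[float(i)] for i in range(8)]
    query = [1.0, 0.0]
    print(select_top_k_blocks(query, keys, block_size=2, top_k=2))
    print(block_sparse_attention(query, keys, values, block_size=2, top_k=2))
```

With `top_k` set to the total number of blocks, the sketch reduces to ordinary dense attention, which mirrors the fallback to dense attention for short sequences that the article describes.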

Benchmark Performance and Speed Gains

In the reported dataset comparisons, training on UltraFineWeb yielded an MMLU score of 32.24%, ahead of FineWeb (28.84%) and FineWeb-edu (31.80%). On ARC-C and ARC-E, the scores were 35.67% and 70.62% respectively, surpassing competing datasets by over 10 percentage points. Compared to Qwen3-8B, MiniCPM4 used only 22% of the training data yet delivered a 7-fold increase in inference speed on 128K-token documents when tested on end-side GPUs such as the Jetson AGX Orin and RTX 4090. The average decoding speed exceeded 200 tokens/s for long-context inputs, and the architecture degraded gracefully to dense attention for shorter sequences. Additionally, BitCPM4 applied quantization-aware training, allowing deployment on devices with even stricter memory constraints with little loss in performance.
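The 22% figure follows directly from the two token counts quoted in the article. A quick check, using only those numbers (the variable names are illustrative):

```python
# Training-token counts as quoted in the article.
minicpm4_tokens = 8e12   # 8 trillion
qwen3_tokens = 36e12     # 36 trillion

ratio = minicpm4_tokens / qwen3_tokens
multiple = qwen3_tokens / minicpm4_tokens

print(f"MiniCPM4 used {ratio:.1%} of the tokens used by Qwen3-8B")  # 22.2%
print(f"Qwen3-8B used {multiple:.1f}x as many tokens")               # 4.5x
```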

Key Takeaways from MiniCPM4:

MiniCPM4 comes in 0.5B and 8B parameter sizes, optimized for edge devices.

It used only 8 trillion training tokens, versus 36 trillion for Qwen3-8B.

It achieved 7x faster processing of 128K-token documents compared to Qwen3-8B.

InfLLM v2 reduced attention computation costs by 60% using block-level attention.

UltraFineWeb outperformed FineWeb by 3.61 percentage points (English) and 1.98 percentage points (Chinese) in average benchmark performance.

Reported scores reached 35.67% on ARC-C, 70.62% on ARC-E, and 32.24% on MMLU, exceeding results obtained with prior datasets.

BitCPM4 enabled ternary LLMs suitable for extremely constrained hardware.

CPM.cu inference system combined CUDA optimization with speculative sampling.

UltraChat v2 enabled enhanced fine-tuning with reasoning-intensive dialogue generation.

ModelTunnel v2 used ScalingBench for precise hyperparameter tuning, increasing training efficiency.
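To make the idea of ternary weights concrete, here is a minimal sketch of one common ternarization scheme: scale by the mean absolute weight, round, and clamp to {-1, 0, 1}. The article does not say which scheme BitCPM4 uses, so this absmean approach and the function names `ternarize` and `dequantize` are assumptions chosen for illustration only.

```python
def ternarize(weights, eps=1e-8):
    """Map float weights to codes in {-1, 0, 1} plus one shared scale.

    Assumption: the scale is the mean absolute weight (an absmean scheme),
    which is not confirmed to be the method used by BitCPM4.
    """
    scale = max(sum(abs(w) for w in weights) / len(weights), eps)
    codes = [max(-1, min(1, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from ternary codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.9, -0.05, -1.2, 0.4, 0.0]
    codes, scale = ternarize(weights)
    print(codes, scale)
    print(dequantize(codes, scale))
```

Each weight then needs fewer than two bits of storage plus a shared scale, which is why ternary models suit memory-constrained hardware. In quantization-aware training, a step like this would be applied during the forward pass so the model learns to tolerate the rounding.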

Conclusion: Efficient LLMs for Edge AI Applications

The MiniCPM4 team took a comprehensive approach that targets the main inefficiencies of current LLMs across architecture, data, training, and inference. With these architectural, training, and deployment strategies, the models maintain high-quality responses, support long-context comprehension, and perform well under edge constraints. Beyond raw metrics, the work suggests that strong performance is achievable outside the cloud. It opens up application domains such as secure offline assistants, real-time mobile AI, and autonomous embedded systems, without the traditional computational burden.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.
