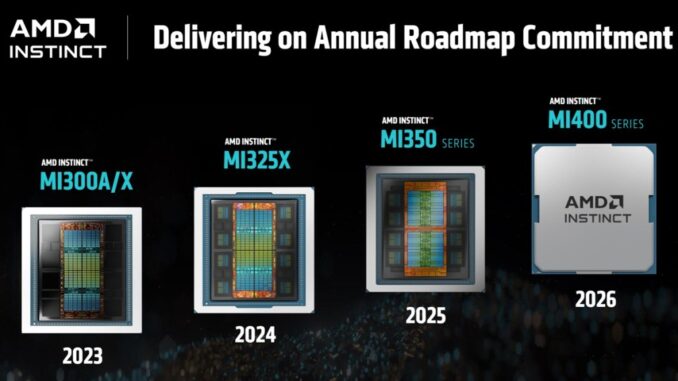

AMD unveiled its comprehensive end-to-end integrated AI platform vision and introduced its open, scalable rack-scale AI infrastructure built on industry standards at its annual Advancing AI event.

The Santa Clara, California-based chip maker announced its new AMD Instinct MI350 Series accelerators, which are four times faster on AI compute and 35 times faster on inferencing than prior chips.

AMD and its partners showcased AMD Instinct-based products and the continued growth of the AMD ROCm ecosystem. AMD also showed its new open rack-scale designs and a roadmap that it says will deliver leading rack-scale AI performance beyond 2027.

“We can now say we are at the inference inflection point, and it will be the driver,” said Lisa Su, CEO of AMD, in a keynote at the Advancing AI event.

In closing, in a jab at Nvidia, she said, “The future of AI will not be built by any one company or within a closed system. It will be shaped by open collaboration across the industry with everyone bringing their best ideas.”

AMD unveiled the Instinct MI350 Series GPUs, setting a new benchmark for performance, efficiency and scalability in generative AI and high-performance computing. The MI350 Series, consisting of both Instinct MI350X and MI355X GPUs and platforms, delivers a four times generation-on-generation AI compute increase and a 35 times generational leap in inferencing, paving the way for transformative AI solutions across industries.

“We are tremendously excited about the work you are doing at AMD,” said Sam Altman, CEO of OpenAI, on stage with Lisa Su.

He said he couldn’t believe it when he heard about the specs for MI350 from AMD, and he was grateful that AMD took his company’s feedback.

AMD demonstrated end-to-end, open-standards rack-scale AI infrastructure, which is already rolling out with AMD Instinct MI350 Series accelerators, 5th Gen AMD Epyc processors and AMD Pensando Pollara network interface cards (NICs) in hyperscaler deployments such as Oracle Cloud Infrastructure (OCI) and is set for broad availability in the second half of 2025. AMD also previewed its next-generation AI rack, called Helios.

It will be built on the next-generation AMD Instinct MI400 Series GPUs, the Zen 6-based AMD Epyc Venice CPUs and AMD Pensando Vulcano NICs.

“I think they are targeting a different type of customer than Nvidia,” said Ben Bajarin, analyst at Creative Strategies, in a message to GamesBeat. “Specifically I think they see the neocloud opportunity and a whole host of tier two and tier three clouds and the on-premise enterprise deployments.”

Bajarin added, “We are bullish on the shift to full rack deployment systems and that is where Helios fits in which will align with Rubin timing. But as the market shifts to inference, which we are just at the start with, AMD is well positioned to compete to capture share. I also think, there are lots of customers out there who will value AMD’s TCO where right now Nvidia may be overkill for their workloads. So that is area to watch, which again gets back to who the right customer is for AMD and it might be a very different customer profile than the customer for Nvidia.”

The latest version of the AMD open-source AI software stack, ROCm 7, is engineered to meet the growing demands of generative AI and high-performance computing workloads while improving the developer experience across the board. (ROCm, short for Radeon Open Compute, is an open-source software platform for GPU-accelerated computing on AMD GPUs, particularly for high-performance computing and AI workloads.) ROCm 7 features improved support for industry-standard frameworks, expanded hardware compatibility, and new development tools, drivers, APIs and libraries to accelerate AI development and deployment.

In her keynote, Su said, “Openness should be more than just a buzzword.”

The Instinct MI350 Series exceeded AMD’s five-year goal to improve the energy efficiency of AI training and high-performance computing nodes by 30 times, ultimately delivering a 38 times improvement. AMD also unveiled a new 2030 goal to deliver a 20 times increase in rack-scale energy efficiency from a 2024 base year, enabling a typical AI model that today requires more than 275 racks to be trained in fewer than one fully utilized rack by 2030, using 95% less electricity.
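The 95% figure is consistent with the 20 times efficiency target if one makes the simplifying assumption that electricity use for a fixed workload scales inversely with energy efficiency. A minimal sketch of that arithmetic follows; the function name is illustrative and does not come from AMD.

```python
def electricity_reduction(efficiency_gain: float) -> float:
    """Fraction of electricity saved for a fixed workload.

    Assumes energy used is inversely proportional to energy efficiency,
    which is a simplification made here for illustration only.
    """
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return 1.0 - 1.0 / efficiency_gain


# AMD's stated 2030 goal: a 20 times gain in rack-scale efficiency over 2024.
print(f"{electricity_reduction(20):.0%}")  # prints 95%
```

The same reasoning does not by itself explain the reduction from more than 275 racks to fewer than one, which is a larger ratio than 20 and so must rest on additional factors beyond this single efficiency number.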

AMD also announced the broad availability of the AMD Developer Cloud for the global developer and open-source communities. Purpose-built for rapid, high-performance AI development, the service gives users access to a fully managed cloud environment with the tools and flexibility to get started with AI projects and grow without limits. With ROCm 7 and the AMD Developer Cloud, AMD is lowering barriers and expanding access to next-gen compute. Strategic collaborations with leaders like Hugging Face, OpenAI and Grok are proving the power of co-developed, open solutions. The announcement drew cheers from the audience, as the company said it would give attendees developer credits.

Broad Partner Ecosystem Showcases AI Progress Powered by AMD

AMD customers discussed how they are using AMD AI solutions to train today’s leading AI models, power inference at scale and accelerate AI exploration and development.

Meta detailed how it has leveraged multiple generations of AMD Instinct and Epyc solutions across its data center infrastructure, with Instinct MI300X broadly deployed for Llama 3 and Llama 4 inference. Meta continues to collaborate closely with AMD on AI roadmaps, including plans to leverage MI350 and MI400 Series GPUs and platforms.

Oracle Cloud Infrastructure is among the first industry leaders to adopt the AMD open rack-scale AI infrastructure with AMD Instinct MI355X GPUs. OCI leverages AMD CPUs and GPUs to deliver balanced, scalable performance for AI clusters, and announced it will offer zettascale AI clusters accelerated by the latest AMD Instinct processors with up to 131,072 MI355X GPUs to enable customers to build, train, and inference AI at scale.

Microsoft announced Instinct MI300X is now powering both proprietary and open-source models in production on Azure.

HUMAIN discussed its landmark agreement with AMD to build open, scalable, resilient and cost-efficient AI infrastructure leveraging the full spectrum of computing platforms only AMD can provide.

Cohere shared that its high-performance, scalable Command models are deployed on Instinct MI300X, powering enterprise-grade LLM inference with high throughput, efficiency and data privacy.

In the keynote, Red Hat described how its expanded collaboration with AMD enables production-ready AI environments, with AMD Instinct GPUs on Red Hat OpenShift AI delivering powerful, efficient AI processing across hybrid cloud environments.

“They can get the most out of the hardware they’re using,” said the Red Hat exec on stage.

Astera Labs highlighted how the open UALink ecosystem accelerates innovation and delivers greater value to customers, and shared plans to offer a comprehensive portfolio of UALink products to support next-generation AI infrastructure.

Marvell joined AMD to share the UALink switch roadmap, the first truly open interconnect, bringing the ultimate flexibility for AI infrastructure.
